Early efforts in Artificial Intelligence

Written by Sil Hamilton in December 2023.

Happy end-of-2023! That was a busy year. AI dominated the conversation.

But here’s the problem: the term is too nebulous. What is AI? Business leaders mistrust it, venture capitalists and their investment targets misrepresent it, and others fear it. I think much of this consternation comes from a general unfamiliarity with what artificial intelligence actually is. Using the term in a public setting invokes all sorts of falsehoods and misapprehensions. It’s become fashionable for certain practitioners and researchers to avoid calling large language models AI to avoid this situation from aggravating.

I also like to avoid using the term in private and academic conversations. I’m far from the only one: Apple generally does not call "AI" features on their platform AI, instead opting for machine learning. Stanford’s CS224N: Natural Language Processing (NLP) with Deep Learning differentiates between NLP and AI research. To call ChatGPT an “AI” is not accurate, not by a long shot. But it is true we are in a golden age of machine learning. I find large language models are amazing; I’ve been studying them for five years and expect to continue studying them for a long time to come. But they are not, in and of themselves, examples of “artificial intelligence.” Language models do what we trained them to: they predict language. There’s a difference between that and an agential system grounded in a physical world like our own. Language models are a tool traditionally used in computational linguistics, not in pursuits of algorithms employing AI.

But it is likewise disingenuous to say NLP and AI research are not interlinked at some level. Their histories are closer than one might imagine—and so are the histories of mathematics, linguistics, and philosophy! I thought it’d be nice to end this year with a retrospective on early artificial intelligence efforts. This retrospective will take us through the origins of natural language processing, information theory, lambda calculus, early mainframes; all technologies instrumental to the rise of what some now call AI. Understanding it helps clear the air with what ChatGPT is (for those who are wondering), and how it figures into the grand scheme of things.

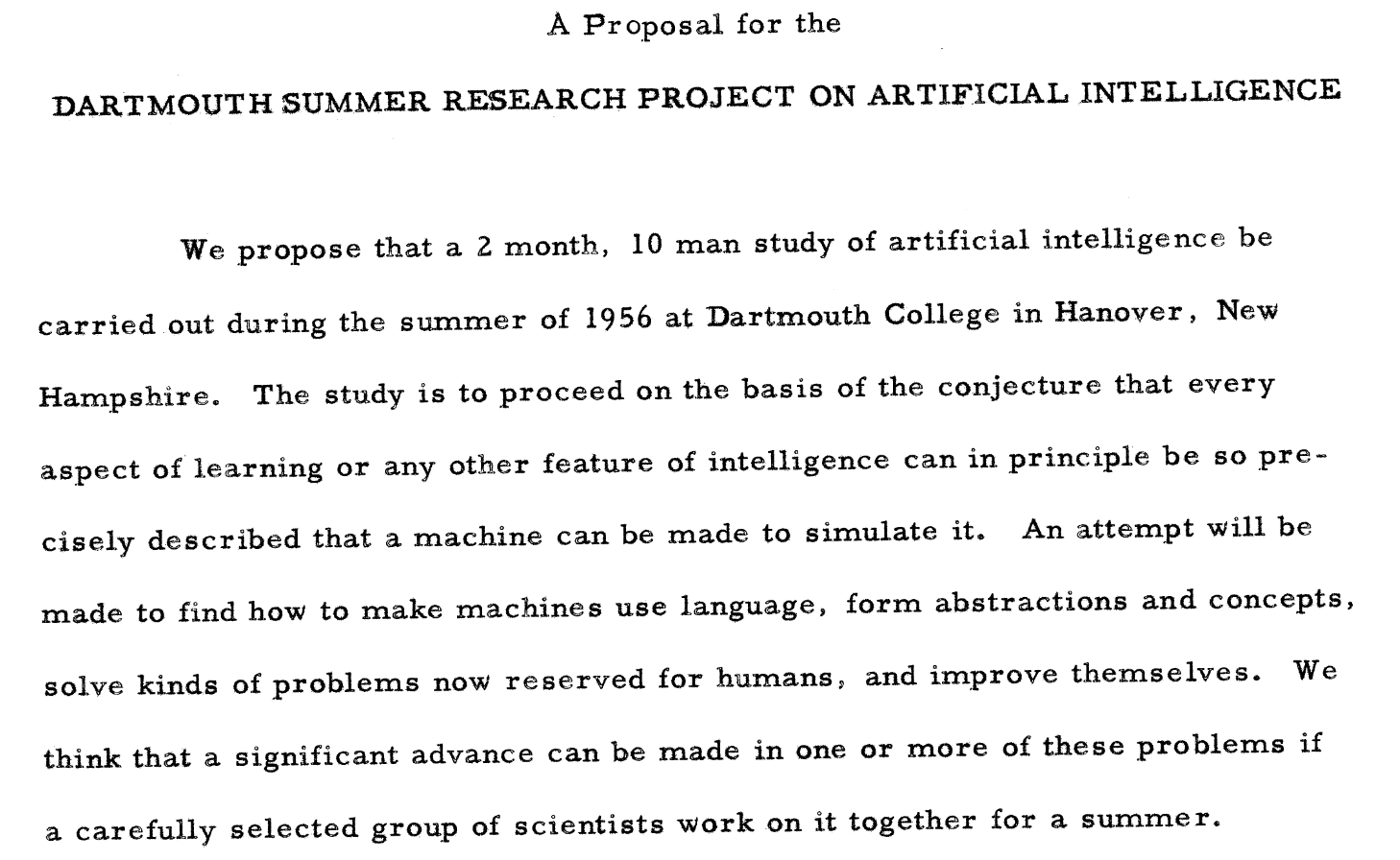

I’ll begin with the term itself. When was “artificial intelligence” coined In the summer of 1956 mathematician Claude Shannon and early computer scientist John McCarthy convened a number of leading researchers at Dartmouth College to workshop what they were calling artificial intelligence. What, in their opinion, was artificial intelligence? The proposal gave AI the working definition, referring it to as “the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can […] simulate it.” See the proposal here. It’s a great read.

Figure 1: A portion of the Dartmouth Workshop proposal. Note the focus on having computers understand language.

This workshop did not come out of nowhere. It was firmly embedded in research pursuits already active for years. Academics at MIT had already been researching “computer learning” on their mainframes (primarily the IBM 704) for some five years, and 1951 had seen Claude Shannon announce his “language model”—identical to modern implementations at least in principle. Hopes of a breakthrough were high, and so the researchers thought to label the goal: artificial intelligence.

They held the workshop during a time busy with the unveiling of universal theories purporting to unify whole practices. See lambda calculus for mathematics, the standard model for physics, universal grammar for linguistics, and so on. The relative success of the Macy Conferences (where McCulloch and Pitts unveiled the first artificial neuron) suggested researchers interested in human behaviour would likewise soon uncover some fundamental truth governing intelligence. And so the workshop brought together researchers of two types: information theory and computation.

Information theory has its roots in the second world war, where governments incetivized mathematicians to find ways to decrypt enemy communications. His experiences working for the American government inspired mathematician Claude Shannon to publish his 1948 two-part memorandum on “a mathematical theory of communication” wherein he argued understanding communication did not require analyzing what any given message said, but rather what it did not say. To pick one word over another implies a particular decision, a particular logic. Reversing this decision is not only an efficient way to decode a message, but to understand the fundamentals underpinning that decision.

Shannon’s holistic understanding of communication finds a parallel in the philosophy of linguist Ferdinand de Saussure (1857-1913), one father of linguistics. Saussure split the word into that which is the literal word itself, that being the letters constituting the word itself—the signifier and that which the signifier is signifying, the target of the word—the signified. Saussure broke ground with this distinction. It implies the actual character of the word, the letters making up a word, are arbitrary and thus only defined in opposition to others word-constructs when considering a language as a whole. The letters comprising the words “cat” and “dog” do not intrinsically reflect their targets. I know the difference, of course, and so I pick one over the other depending on the context. Claude Shannon took this distinction to new heights.

Let’s take English as an example. Our script has 52 letters, then an additional ten commonly used letters of punctuation. None intrinsically refer to their signifieds, and indeed, they only invite us to comprehend them when grouped together in words and sentences. Claude Shannon made two observations. First, that not all letters are equal. The vowels are the most common letters, and consonants typically cannot follow other consonants. There is a rhyme and reason to language. Where does this rhyme and reason come from? This was his second observation. Our knowledge of the world, he says. Words have meaning, signifieds, and forming coherent sentences requires those participating in a conversation to share a persistent understanding of the world. After all—if signifiers, words, are in and of themselves arbitrary, it is only in active communication that meaning can arise.

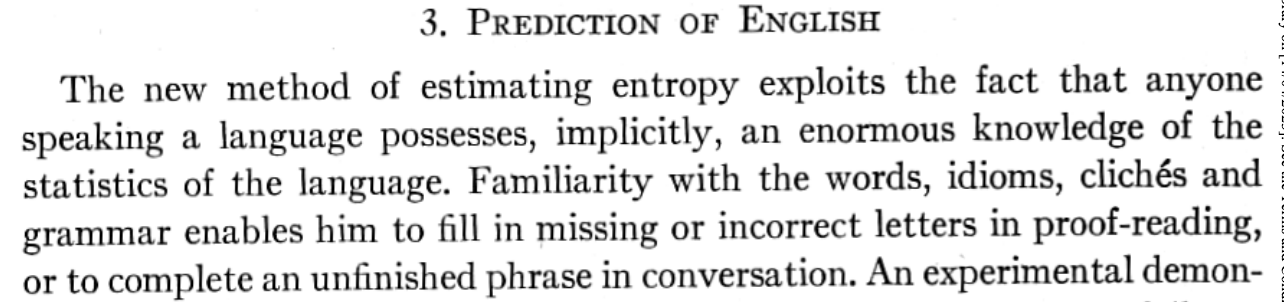

Figure 2: From Shannon’s 1951 Prediction and Entropy of Printed English.

But here was where Claude Shannon made his mark. His idea of information, or entropy, suggested meaning also works vice versa. If you were to have a system (say, a computer) study and memorize the probabilities governing how words follow one another, their state transitions, then your system would begin to understand the rules governing those transitions. Your system would intuit the signified associated with each signifier. This was a revelation. Just like you might compress a computer file, language models compress knowledge. Compression, as it turns out, is equivalent to some form of intelligence.

This is decently well recognized today. “ChatGPT is a blurry JPEG of the web,” wrote science fiction author Ted Chiang for The New Yorker this year. “Being able to compress well is closely related to intelligence,” argues Marcus Hutter on the web page for his “500,000€ Prize for Compressing Human Knowledge” circa 2000. Shannon and his contemporaries recognized this, and so language learning was a major focus for those attending the Dartmouth Workshop.

The other organizer of the event was John McCarthy, an assistant professor at Dartmouth; research fellow at MIT; and full professor at Stanford. He is now principally known for the programming language LISP (standing for List Processor), which he defined in a twelve-page paper entitled “Recursive Functions of Symbolic Expressions and Their Computation by Machine.” LISP is a simple language, taking advantage of recursive techniques borrowed from lambda calculus to produce complex behaviour. Lambda calculus was a formal system for expressing a universal model of computation developed in the 1930s by Alonzo Church—a notation set for describing computation in arbitrary symbolic languages. Where are symbolic languages found? Programming languages and natural languages.

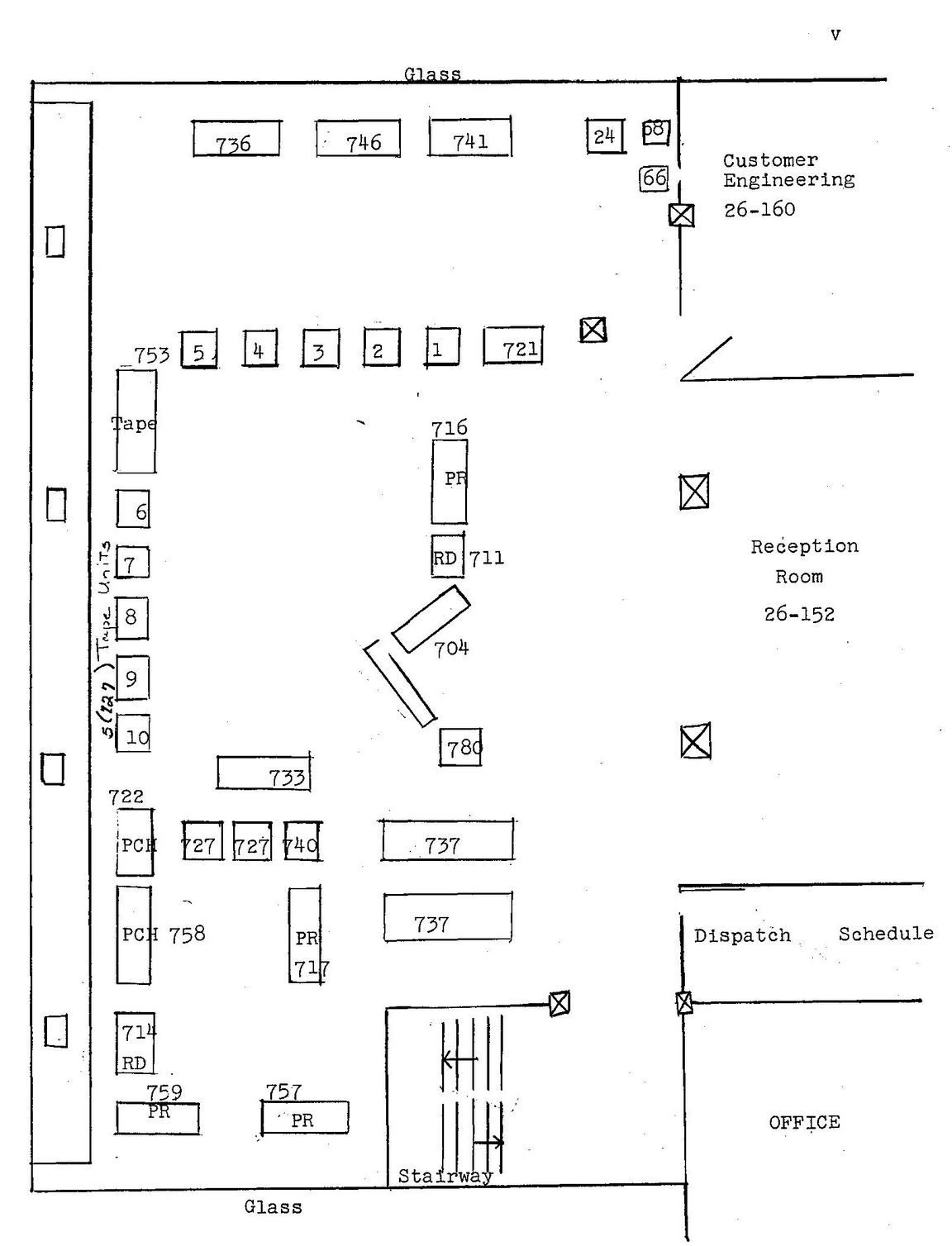

McCarthy implemented LISP on a IBM 704 purchased by MIT. It was physically large and slow to interface with. It was nonetheless influential in early artificial intelligence research thanks to LISP. Here is a MIT manual uploaded to the Internet Archive concerning how to use the mainframe. Highlights include page 12, which records the storage apparatus available to users. Details on the compiler are on page 140. Mainframes were slow—slow enough to hamper early efforts to implement the neural networks described during the Macy Conferences. Neural networks, like those found in large language models, would not be tenable until the 1990s.

Figure 3: Floorplan of MIT’s IBM 704. Big!

Speed and memory were both concerns. Concurrent with the IBM 704 was the MIT-grown TX-0, developed to provide researchers with a larger amount of memory. MIT students would use the TX-0 to study artificial intelligence up through the 1960s. The Internet Archive thankfully records these efforts, and so I’ve gone through these uploaded documents to pull out highlights. Games were common. Researchers put effort in developing ways to have machines teach themselves, similar to reinforcement learning today. Also common were analogies to mice. Claude Shannon developed an “electric mouse,” and MIT researchers developed the MOUSE program whose only goal was to navigate a virtual maze in cyberspace. They hoped computers would be able to teach themselves the ways of the world.

The Dartmouth Workshop was where efforts in cryptography, mathematics, and linguistics combined forces to find a path to actualizing artificial intelligence. It was a multidisciplinary effort. Those attending found common elements in each constituent practice—compression is intelligence is language understanding. This is perhaps best recorded in Noam Chomsky’s famous hierarchy of grammars, wherein he differentiates regular languages (finite state automata); context-free languages (non-deterministic pushdown automata); context-sensitive languages (linear-bounded non-deterministic Turing machines); and recursively enumerable languages (Turing machines). This hierarchy unites programming and natural languages, and so it should perhaps be no surprise computational linguistics was where our first “truly successful” examples of artificially intelligent computer programs emerge from.

And maybe that’s the way to go about it. I dislike calling ChatGPT an “artificial intelligence.” It is not an it. It is closer to a simulator, or perhaps a system modelling "semantic laws of motion." Using artificial intelligence as a noun invites discussions of what it means to be intelligent, what the boundary is, and so on. It is better to skip the debate and refer to systems as being artificially intelligent, or to refer to it as a property or goal of a research program. I think large language models are promising. I spend my days studying them. Through them I study all sorts of subjects from pure mathematics and language acquisition to analytic philosophy and neuroscience. Calling large language models “AI” cheapens them; I think it distracts, confuses, and emboldens people in the wrong dimensions. Who remembers the Turing test?